AI coding systems are no longer mere tools that assist with routine tasks. They now act as autonomous contributors, operating within production pipelines and writing business logic. This autonomy increases security risk, as agentic coding tools such as Anthropic’s Claude Code illustrate, because the code they produce does not fit the assumptions behind traditional security models. This article explains why AI-generated code typically breaks those models and offers practical guidance on improving code security.

The Impact of Claude Code on Security Standards

Several security standards, such as the Open Worldwide Application Security Project’s Software Assurance Maturity Model (OWASP SAMM), the International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) 27034 standard for application security, and the National Institute of Standards and Technology’s Secure Software Development Framework (NIST SSDF), were built around human agency. Claude-generated code and other AI outputs challenge this foundation in several ways, as explained below.

- Loss of author accountability: AI code, including that generated by Claude Code, generally lacks the key attributes traditionally associated with responsible software development. This creates compliance blind spots in the organization’s development processes, including:

- Non-traceable decision rationales

- A lack of threat-model awareness

- No formal review context and processes

- Business logic that is not context-aware

- A lack of secure design intent

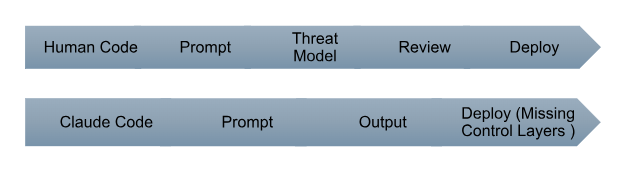

Figure 1. Human Code vs. Claude Code

- Probabilistic logic and non-deterministic risk: Because its output is probabilistic, Claude Code can produce different code for the same request, which undermines static, rule-based security principles. This makes reproducibility difficult and complicates an organization’s code auditing process. Likely scenarios include the following:

- Variants of similar functions across files

- Inconsistent validation patterns

- Divergent error handling

- Dependency introduction without governance: Unlike developers, who know which libraries their organization has approved, AI has no awareness of internal approved dependency lists. It therefore frequently imports packages that have not passed any governance process. These include the following:

- Obscure packages

- Deprecated libraries

- Vulnerable transitive chains

- Unpinned versions

- Standards assume secure intent: Another key aspect is that secure coding standards rely on deliberate safe choices. AI, on the other hand, optimizes for functional success rather than secure design. This misalignment tends to widen the architectural risk involved when using Claude Code. However, this risk can sometimes be mitigated by enforcing AI-specific guardrails.

Claude Code Security Best Practices

There are several Claude Code security best practices that organizations can adopt to minimize risk. These include the following:

- Enforce AI-specific software development life cycle (SDLC) controls: These controls must be both implemented and enforced. They should incorporate the following:

- Mandatory tagging of AI-generated commits

- Separate review workflows for AI outputs

- AI-origin metadata embedded in repositories

- Dedicated Static Application Security Testing (SAST) profiles for AI code
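The tagging and routing controls above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed mechanism: the `AI-Generated` trailer name, the accepted values, and the three route names are all assumptions made for the example, and a real pipeline would wire the result into its own merge checks.

```python
import re

# Hypothetical trailer name; the article does not prescribe one.
AI_TRAILER = "AI-Generated"

# A trailer line looks like "Key: value".
TRAILER_RE = re.compile(r"^(?P<key>[A-Za-z][A-Za-z0-9-]*):\s*(?P<value>.+)$")


def parse_trailers(message: str) -> dict[str, str]:
    """Return key/value trailers from the last paragraph of a commit message."""
    paragraphs = [p for p in re.split(r"\n\s*\n", message.strip()) if p.strip()]
    if len(paragraphs) < 2:
        return {}  # a lone subject line carries no trailer block
    trailers = {}
    for line in paragraphs[-1].splitlines():
        match = TRAILER_RE.match(line.strip())
        if match is None:
            return {}  # the last paragraph is prose, not a trailer block
        trailers[match["key"].lower()] = match["value"].strip()
    return trailers


def review_route(message: str) -> str:
    """Pick a (hypothetical) review workflow from the AI-origin trailer."""
    value = parse_trailers(message).get(AI_TRAILER.lower())
    if value is None:
        return "reject-untagged"  # tagging is mandatory, so block the commit
    return "ai-review" if value.lower() in {"yes", "true"} else "standard-review"
```

Calling `review_route` on each commit message in a pre-merge job would let untagged commits fail fast while AI-tagged commits go to the separate review workflow.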

- Harden prompt governance: Prompts shape the architecture and logic of the generated code, so weak prompts tend to produce insecure logic. A variety of measures can harden the organization’s prompt governance processes. These include the following:

- Prohibiting prompts requesting bypasses or shortcuts

- Enforcing secure prompt templates

- Maintaining prompt audit logs

- Classifying prompts as security-relevant artifacts
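As a rough sketch of how prompt screening and audit logging might fit together, the Python below checks a prompt against a small deny-list and builds one JSON audit record. The phrase list, the field names, and the choice to store a hash rather than the raw prompt are assumptions for illustration; simple substring matching like this is easy to evade and would only be a first filter.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical phrases that suggest a request to bypass a control.
BLOCKED_PHRASES = ("disable auth", "skip validation", "ignore security", "bypass")


def check_prompt(prompt: str) -> list[str]:
    """Return the blocked phrases found in a prompt (empty list means allowed)."""
    lowered = prompt.lower()
    return [phrase for phrase in BLOCKED_PHRASES if phrase in lowered]


def audit_record(user: str, prompt: str) -> str:
    """Build one JSON audit-log line for a prompt."""
    violations = check_prompt(prompt)
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        # Store a digest so the log can prove what was sent without keeping
        # potentially sensitive prompt text in plain form.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "violations": violations,
        "allowed": not violations,
    }
    return json.dumps(record, sort_keys=True)
```

Appending each record to an append-only store gives reviewers a trail that treats prompts as security-relevant artifacts.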

- Require deterministic dependency control: Implementing deterministic dependency control measures is necessary to operationalize Claude Code security best practices. This typically entails the adoption of the following measures:

- Lockfiles enforced pre-merge

- Software Bill of Materials (SBOM) generation per AI commit

- Auto-block non-approved packages

- Continuous Common Vulnerabilities and Exposures (CVE) drift scanning
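A pre-merge dependency gate along these lines could look like the following Python sketch. It assumes a `requirements.txt`-style file, a hypothetical `APPROVED` set standing in for the organization's real allowlist, and a deliberately strict rule that only exact `name==version` pins pass; extras, environment markers, and hashes are out of scope here.

```python
import re

# Hypothetical approved list; a real one would come from organizational policy.
APPROVED = {"requests", "flask", "cryptography"}

# Matches "name==version" exactly; ranges, wildcards, and bare names do not match.
PINNED_RE = re.compile(r"^([A-Za-z0-9._-]+)==([A-Za-z0-9.!+_-]+)$")


def check_requirements(text: str) -> list[str]:
    """Return policy violations for requirements.txt-style content."""
    problems = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        match = PINNED_RE.match(line)
        if match is None:
            problems.append(f"unpinned or unsupported spec: {line}")
            continue
        name = match.group(1).lower()
        if name not in APPROVED:
            problems.append(f"package not on approved list: {name}")
    return problems
```

A merge check would fail whenever the returned list is non-empty, which blocks both unpinned versions and packages outside the approved set before they reach the main branch.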

- Inject security guardrails into AI workflows: Security guardrails are a new control measure in AI development workflows. They should therefore be implemented as part of Claude Code security best practices. The injection of security guardrails into the organization’s workflows should usually entail the following:

- Pre-prompt threat reminders (designed to assume hostile inputs)

- Security linting of AI outputs

- Runtime policy enforcement

- Auto-regeneration if risky patterns are detected
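To make the linting and auto-regeneration steps concrete, here is a minimal Python sketch that uses the standard `ast` module to flag a few risky call patterns in generated Python code. The `RISKY_CALLS` deny-list and the `needs_regeneration` helper are assumptions for illustration, and the check is name-based only, so aliased imports such as `from pickle import loads` would slip past it.

```python
import ast

# Hypothetical deny-list of call names; tune it to your own policy.
RISKY_CALLS = {"eval", "exec", "pickle.loads", "os.system"}


def call_name(node: ast.Call) -> str:
    """Return a name such as 'eval' or 'pickle.loads', or '' if unknown."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""


def lint_source(source: str) -> list[str]:
    """Return findings for risky calls, ordered by line number."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = call_name(node)
        if name in RISKY_CALLS:
            findings.append((node.lineno, f"call to {name}"))
        for kw in node.keywords:
            # Flag a literal shell=True on any call.
            if (kw.arg == "shell" and isinstance(kw.value, ast.Constant)
                    and kw.value.value is True):
                findings.append((node.lineno, f"shell=True in {name or 'call'}"))
    return [f"line {lineno}: {text}" for lineno, text in sorted(findings)]


def needs_regeneration(source: str) -> bool:
    """Signal that the output should be regenerated or sent to a human."""
    return bool(lint_source(source))
```

The source is only parsed, never executed, so the check is safe to run on untrusted output before it is written to the repository.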

How to Adapt Security Models for AI-Driven Development

Traditional trust boundaries collapse when AI writes both backend and client code. This is especially true when this is done from the same prompt. Fortunately, there are many ways to ensure that security models adapt to AI-driven development environments. Such measures include the following:

- Shift from code trust to output verification: An organization’s AI security program should stop trusting code by default and instead verify every output, assuming that:

- AI outputs are untrusted.

- Every function is externally influenced.

- Business logic abuse is likely.

- Privilege escalation paths may be hidden.

- Explicitly add AI to threat models: Treat AI systems as distinct threat actors and add new threat actor categories as they arise. For example, a threat model can include a “non-deterministic code generator with no contextual awareness” as an actor. What should then follow is a thorough process to assess risks across the entire AI ecosystem, including the following:

- Injection amplification

- Authentication drift

- Authorization misapplication

- Secret leakage patterns

- Unsafe serialization defaults
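One lightweight way to record such an actor is as structured data that can be checked automatically. The Python sketch below is only an illustration: the `ThreatActor` class, the actor name, and the two mitigations filled in are hypothetical, and the empty strings simply mark risks that still need a control assigned.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatActor:
    name: str
    description: str
    # Maps each risk to its mitigating control ("" means none assigned yet).
    risks: dict[str, str] = field(default_factory=dict)

    def unmitigated(self) -> list[str]:
        """Return risks that have no control assigned, in alphabetical order."""
        return sorted(risk for risk, control in self.risks.items() if not control)


ai_generator = ThreatActor(
    name="ai-code-generator",
    description="Non-deterministic code generator with no contextual awareness",
    risks={
        "injection amplification": "security linting of AI outputs",
        "authentication drift": "",
        "authorization misapplication": "",
        "secret leakage patterns": "secret scanning pre-merge",  # hypothetical control
        "unsafe serialization defaults": "",
    },
)
```

Here `ai_generator.unmitigated()` lists the three risks without a control, which gives the threat-modeling review a concrete backlog.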

- Redefine secure code ownership: Code ownership usually determines who is accountable for keeping code secure. For AI-generated code, ownership must therefore shift from the individual developer to the team and, eventually, to the organization. To manage it securely, AI code often requires the following:

- Centralized approval authority

- Enhanced audit trails

- Machine-assisted review layers

- Control over model interaction scope

Rethinking Security in the Age of AI Coding

Enhancing Claude Code security also requires continually rethinking security in the age of AI coding. AI-generated code does not just speed up development. It also creates a new set of software risks that must be appropriately analyzed and managed.

Why Traditional Controls Fail

Applying traditional controls to AI risks has often led to undesirable results because those controls rest on assumptions that do not hold in AI-driven environments. Different approaches are therefore required. The table below contrasts the most common traditional assumptions with the reality of AI-generated code.

Table 1: Traditional Assumptions vs. AI Reality

| Traditional Assumption | AI Reality |
| --- | --- |
| Developer understands threats | AI optimizes for correctness |
| Code is reviewed in a linear fashion | AI outputs are massive and opaque |
| Dependencies are chosen intentionally | Dependencies are implicitly decided |
| Patterns are consistent throughout | Patterns vary in an unpredictable way |

Emerging Claude Code Security Risks

Claude introduces a variety of code security risks into an organization’s operating environments. These emerging AI code risks include:

- Silent authentication bypass patterns.

- Over-permissive Application Programming Interface (API) exposure.

- Hidden injection sinks.

- Embedded secrets in configurations.

- Logic flaws across chained functions.

- Reintroduction of patched vulnerabilities.

- Insecure fallback behavior.

Figure 2: Claude: Secure Code, Insecure Practices

The Governance Imperative

An organization that uses AI coding assistants needs overall security governance principles. As a starting point, AI-generated code should not be treated as a special case; it should be treated like third-party software, even though it is produced inside the organization. This is because Claude’s code:

- Requires verification, not trust.

- Requires controls, not assumptions.

- Requires monitoring, not just reviews.

As a result, security teams that fail to adapt to a new governance imperative, such as performing AI code security checks, are likely to see an increase in risk. Such risks may include the following:

- Increased breach likelihood

- Audit failures

- Supply chain risks

- Compliance challenges

FAQ

- How do security risks in Claude Code differ from traditional code?

Claude-generated code lacks human threat awareness at both the generation and operation stages. Unlike traditional code, which is tied to developer intent, AI outputs are probabilistic. As a result, they are harder to audit and prone to hidden vulnerabilities. This is true across business logic and architecture layers within an organization.

- What are examples of security incidents involving AI-generated code?

Security incidents involving AI-generated code include production systems exposing API keys, authentication mechanisms being bypassed due to insecure authentication logic, and exploitation of insecure deserialization paths in deployed applications. Several security operations in organizations have also flagged AI-written components that passed functional testing but failed security validation.

- What types of vulnerabilities are most common in AI-produced code?

Common vulnerabilities in AI-generated code include injection flaws, vulnerable open-source imports, broken access controls, and hardcoded secrets. Improper input validation and dependency-based vulnerabilities are also frequently reported. All these weaknesses can affect the effective operation of AI code, hence leading to poor results.

- Can traditional code review processes catch issues in Claude Code outputs?

Yes. Traditional code review processes can catch issues in Claude Code’s output. However, they can only do this partially and typically struggle with volume, inconsistency, and hidden logic risks that characterize AI outputs. Without AI-specific scanning, traditional code reviewers often miss architectural flaws and dependency risks that are introduced automatically by the Claude model.

Conclusion

This article examined why AI-generated code breaks traditional security models, using Claude Code as a reference point. It covered the impact of Claude Code on security standards, its security risks, and best practices. It also explained why traditional measures fail to address AI-generated code and provided guidance on adapting security models to AI-driven development environments. By following these guidelines, organizations can maintain secure Claude Code development environments and the productivity gains they bring.

Useful References

- National Institute of Standards and Technology. (2022). Secure software development framework (SSDF) version 1.1 (NIST Special Publication 800-218). U.S. Department of Commerce. https://csrc.nist.gov/pubs/sp/800/218/final

- Anthropic. (n.d.). Claude documentation: Security, limitations, and intended use. https://code.claude.com/docs/en/security

- Pearce, H., Ahmad, B., Tan, B., Dolan-Gavitt, B., & Karri, R. (2022). Asleep at the keyboard? Assessing the security of GitHub Copilot’s code contributions. 2022 IEEE Symposium on Security and Privacy (SP). https://gangw.cs.illinois.edu/class/cs562/papers/copilot-sp22.pdf

- OWASP Foundation. (2023). OWASP top 10 for large language model applications. https://owasp.org/www-project-top-10-for-large-language-model-applications/