Introduction

With the rapid rise in the use of Artificial Intelligence (AI)-powered automation tools, employees across organizations are now building applications on their own. While this democratization of innovation accelerates productivity, it also introduces significant risks. This article explores one of these risks, Shadow AI, in detail: what it is, why it matters now, and the primary risks it poses. It also examines how Shadow AI evades security visibility and how organizations can regain visibility and control without stifling innovation.

What Is Shadow AI and Why Does It Matter?

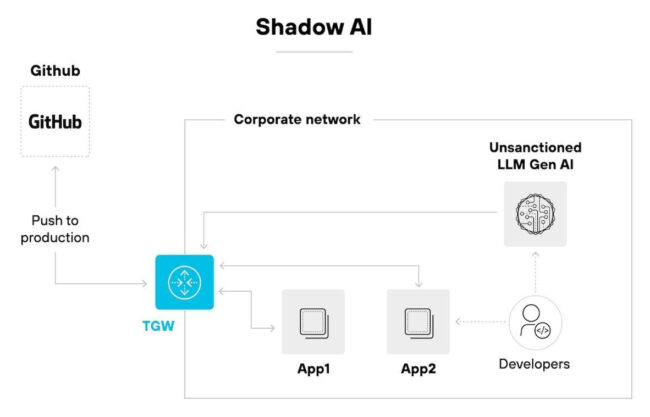

Shadow AI refers to AI tools, models, or applications that are developed, deployed, and used within an organization without adhering to, or being subject to, the organization’s security governance processes. These tools may be created by business users, developers, or external vendors, often for efficiency and convenience rather than compliance. Briefly, Shadow AI can include:

- AI-powered workflow automations that are built on low-code or no-code platforms.

- Unapproved use of generative AI models connected to internal organizational data.

- Custom scripts and bots leveraging public AI application programming interfaces (APIs).

- Embedded AI features within third-party Software-as-a-Service (SaaS) tools that bypass internal security reviews.

Figure 1 shows what Shadow AI entails.

Figure 1: Shadow AI

(Source: https://www.paloaltonetworks.com/cyberpedia/what-is-shadow-ai)

The key point is that Shadow AI is not usually created with malicious intent. In most cases, teams are simply trying to solve problems faster and carry out their tasks more efficiently. However, when AI systems operate outside established controls, organizations can lose visibility into how data is handled across operations, which creates security risks. What makes Shadow AI risks particularly urgent is the nature of AI itself. Unlike traditional applications, AI systems often:

- Continuously learn from data.

- Make autonomous or semi-autonomous decisions.

- Integrate deeply with multiple data sources.

- Operate in an opaque manner, making behavior difficult to explain or audit.

Main Risks Posed by Shadow AI in Modern Organizations

If the growth of AI-built applications is left unchecked, it can introduce strategic, operational, and compliance risks. The major risks associated with Shadow AI in modern organizations include:

- Data exposure and privacy violations: Unauthorized AI tools often depend on sensitive organizational data to function effectively, which is a particular concern in regulated industries. This can result in:

    - Transfer of confidential data to external AI providers

    - Unlawful processing of personally identifiable information (PII)

    - Violation of data residency and sovereignty requirements

- Lack of security visibility: Security teams in most organizations rely on inventories, logging, and monitoring to manage risk. Because Shadow AI operates outside these controls, security investigators may not even be aware that AI-driven processes were involved when an incident occurs. This often results in:

    - Unknown applications accessing internal systems

    - Incomplete audit trails

    - Gaps in security monitoring and alerting

    - Regulatory non-compliance

- Model and logic integrity risks: Shadow AI can produce flawed outputs that directly affect business decisions, customer interactions, and the effective operation of automated controls. AI-built applications may also introduce:

    - Unvalidated models

    - Biased and/or low-quality training data

    - Insecure prompt logic

    - Insecure decision rules

- Compliance and legal exposure: Regulatory frameworks, such as the European Union Artificial Intelligence Act (EU AI Act), increasingly require transparency and accountability for AI use. As global AI regulations mature, Shadow AI is rapidly becoming a significant compliance liability because it tends to:

    - Circumvent formal risk assessments

    - Bypass documentation

    - Bypass approval workflows

    - Make it impossible to demonstrate compliance during audits

How to Audit, Discover, and Control Shadow AI Usage

Addressing Shadow AI within an organization should be a holistic, systematic process that encompasses the following elements:

- Gain visibility into all AI tools: The audit process should begin with comprehensive visibility into all AI tools before any of them are prohibited. This defines the audit universe. Organizations should first understand where and how AI is used across the entity and the specific use cases to which it is applied.

- Establish enterprise-wide discovery mechanisms: The organization should then proceed with Shadow AI detection. This process should be treated as a means of building awareness, not as surveillance. Effective Shadow AI detection combines technical and organizational measures, including the following:

    - Network traffic analysis to identify AI API usage

    - Cloud access security broker (CASB) capabilities to discover unapproved AI tools

    - Application inventories that include AI functionality

    - Surveys and structured engagement with business units

- Classify and prioritize AI use cases: Organizations should recognize that not all Shadow AI poses the same level of risk. Some Shadow AI may be harmless or even useful. Prioritizing AI use cases enables security and governance teams to focus resources on the Shadow AI that carries the highest risk. Once discovered, Shadow AI tools should be categorized based on several variables, including:

    - Data sensitivity

    - Business criticality

    - Level of autonomy

    - External dependencies

- Integrate AI into existing risk processes: This integration ensures that AI does not remain an exception to established controls and is not overlooked. AI usage should be incorporated into organization-wide risk processes, including:

    - Vendor risk assessments

    - Secure development lifecycle (SDLC) reviews

    - Data protection impact assessments

    - Incident response planning sessions

- Provide secure alternatives: One reason Shadow AI proliferates in most organizations is the lack of approved options for employee use. Making pre-approved options available can reduce unapproved experimentation without slowing innovation. Organizations should therefore provide the following to control Shadow AI:

    - Pre-approved AI platforms

    - Secure internal AI services

    - Clear guidance on acceptable use
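The discovery and classification steps above can be sketched in code. The following is a minimal illustration under stated assumptions, not a definitive implementation: it assumes proxy or DNS logs can be reduced to simple `<user> <destination-host>` lines, that the short hostname set stands in for a maintained catalogue of AI endpoints, and that the 1-to-3 scoring scale and tier thresholds are hypothetical values an organization would tune to its own risk appetite.

```python
from collections import defaultdict
from dataclasses import dataclass

# Assumption: a small illustrative set of public AI API hostnames. A real
# deployment would maintain this catalogue from CASB or vendor feeds.
AI_API_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def find_ai_api_usage(log_lines):
    """Return {host: set_of_users} for log lines that reach a known AI API host.

    Assumes the hypothetical log format "<user> <destination-host>".
    """
    usage = defaultdict(set)
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines rather than guess their meaning
        user, host = parts
        host = host.lower()
        if host in AI_API_HOSTS:
            usage[host].add(user)
    return dict(usage)


@dataclass
class AIUseCase:
    """One discovered AI use case, scored on the four variables listed above."""
    name: str
    data_sensitivity: int       # 1 = public data, 3 = regulated or confidential
    business_criticality: int   # 1 = convenience, 3 = core business process
    autonomy: int               # 1 = human reviews output, 3 = acts unattended
    external_dependencies: int  # 1 = internal only, 3 = external AI provider


def risk_tier(use_case):
    """Map the summed score (4 to 12) to a tier; thresholds are assumptions."""
    score = (
        use_case.data_sensitivity
        + use_case.business_criticality
        + use_case.autonomy
        + use_case.external_dependencies
    )
    if score >= 10:
        return "high"
    if score >= 7:
        return "medium"
    return "low"
```

A team might run `find_ai_api_usage` over a day of proxy logs to build an initial inventory, then record each discovered tool as an `AIUseCase` and review the `high` tier first. A simple additive score is used here only for readability; weighted or rule-based schemes may fit better where one variable, such as data sensitivity, should dominate.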

Building an Effective Governance Framework for AI Tools

Security leaders should recognize that sustainable control of Shadow AI requires governance that balances risk management and business agility. The following guidelines outline how to build an effective governance framework for AI tools:

- Define clear AI usage policies: AI acceptable-use policies should be written in plain language and communicated throughout the organization. These policies should specifically address the following:

    - Approved and prohibited AI use cases

    - Data types that may or may not be used with AI tools

    - Requirements for external AI services

    - Accountability for AI-driven outcomes

- Establish cross-functional oversight: Ownership of Shadow AI security should extend beyond security teams. This helps ensure that decisions reflect both risk and value. Governance of Shadow AI should involve:

    - Information security

    - Legal and compliance

    - Data protection

    - Business leadership

    - Internal audit

- Embed governance into workflows: Embedding governance into organizational workflows reduces friction and encourages compliance by design. This entails incorporating the following into security governance:

    - Lightweight approval workflows for new AI tools

    - Standardized risk checklists

    - Automated controls where possible

    - Automated monitoring

- Invest in AI literacy: An informed workforce is one of the most effective ways to counter Shadow AI. Employees should be aware of appropriate Shadow AI security practices. Therefore, training programs should cover the following, among other key aspects:

    - Responsible AI principles

    - Data handling expectations

    - Risks of unapproved AI tools

    - How to request and deploy AI responsibly
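As an illustration of how a plain-language usage policy and a lightweight approval workflow might be turned into an automated control, consider the sketch below. Everything in it is a hypothetical assumption made for the example: the policy fields, the data categories, the tool name `internal-llm-gateway`, and the three possible decisions. It is not a reference to any particular product or standard.

```python
from dataclasses import dataclass

# Hypothetical policy; every value here is an illustrative assumption.
POLICY = {
    "approved_tools": {"internal-llm-gateway"},
    "prohibited_data": {"pii", "credentials"},
    "review_required_data": {"confidential"},
}


@dataclass(frozen=True)
class AIRequest:
    """A request from a business unit to use an AI tool."""
    tool: str
    data_types: frozenset  # categories of data the tool would process
    owner: str             # person accountable for AI-driven outcomes


def evaluate_request(request, policy=POLICY):
    """Return (decision, reasons); decision is 'approve', 'review', or 'deny'."""
    reasons = []

    # Prohibited data types are a hard stop, whatever the tool.
    blocked = request.data_types & policy["prohibited_data"]
    if blocked:
        reasons.append(f"prohibited data types: {sorted(blocked)}")
        return "deny", reasons

    # Anything else that deviates from the policy goes to human review.
    if not request.owner:
        reasons.append("no accountable owner named")
    if request.tool not in policy["approved_tools"]:
        reasons.append(f"tool '{request.tool}' is not pre-approved")
    if request.data_types & policy["review_required_data"]:
        reasons.append("confidential data needs a data protection review")

    return ("review" if reasons else "approve"), reasons
```

Requests that match the policy are approved immediately, which keeps the workflow lightweight, while the returned reasons give reviewers and requesters a transparent explanation for any `review` or `deny` decision.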

FAQs

How should companies handle requests from business units for unapproved AI tool integrations?

Organizations should implement clear AI intake and review processes. These processes should ensure that AI risk is comprehensively evaluated alongside data use and the corresponding business value. There should also be fast, transparent approvals for AI tools in the workplace, paired with secure alternatives to reduce the incentive for employees to bypass controls while maintaining innovation.

Can Shadow AI affect incident response or investigations during breaches?

Yes. Shadow AI can obscure timelines, data flows, and decision logic during incident investigations. This complicates root cause analysis, which is critical to incident management, and can delay containment. The longer an incident remains uncontained, the more business processes it affects, which increases both operational and regulatory impact.

What role do third-party vendors play in expanding Shadow AI risk?

Vendors are increasingly embedding AI features into their products either by design or by default. Organizations, in turn, may not be fully aware of these features, especially without rigorous vendor risk assessments. As a result, they may unknowingly adopt AI capabilities that process sensitive data outside approved governance frameworks, thereby increasing risk.

How might Shadow AI influence digital transformation strategies?

Shadow AI can accelerate short-term transformation by enabling employees to innovate, which may increase efficiency and productivity. However, in the long term, Shadow AI tends to undermine sustainability because of the associated risks. Organizations that align AI adoption with security governance are therefore best positioned to achieve scalable innovation.

Conclusion

Shadow AI is not a future problem to be addressed later; it is a present reality for organizations. The impact of its risks depends on how it is managed and governed within an organization. Organizations that acknowledge its existence, invest in visibility, and implement pragmatic governance processes get the best of both worlds: they can reduce risk while enabling responsible, scalable AI innovation. Security leaders should therefore ensure that Shadow AI is effectively managed to reduce the organization's risk profile.

Useful References

- ISACA. (2025). The rise of Shadow AI: Auditing unauthorized AI tools in the enterprise. https://www.isaca.org/resources/news-and-trends/industry-news/2025/the-rise-of-shadow-ai-auditing-unauthorized-ai-tools-in-the-enterprise

- Lasso Security. (2024). What is Shadow AI? Risks, tools, and best practices for 2025. https://www.lasso.security/blog/what-is-shadow-ai

- European Union Agency for Cybersecurity (ENISA). (2023). Threat landscape for artificial intelligence. https://www.enisa.europa.eu/publications/threat-landscape-for-artificial-intelligence

- National Institute of Standards and Technology. (2023). AI risk management framework (AI RMF 1.0). U.S. Department of Commerce. https://www.nist.gov/itl/ai-risk-management-framework

- McKinsey & Company. (2023). The state of AI in 2023: Generative AI’s breakout year. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2023

- Palo Alto Networks. (n.d.). What is Shadow AI? How it happens and what to do about it. https://www.paloaltonetworks.com/cyberpedia/what-is-shadow-ai