AI agents are not just chatbots with better manners. They plan, call tools, and read and write data. Sometimes they even chain tasks across systems you already struggle to inventory.

That is why AI agent security is now a practical engineering problem, not a future research debate. The moment an agent can act, it becomes part of your attack surface.

What Is AI Agent Security?

AI agent security is the set of controls that keep an agentic system safe while it reasons and acts. It covers classic pillars such as identity, access control, and logging, as well as agent-specific risks, including tool misuse, memory poisoning, and prompt injection.

A helpful way to define the boundary is this: A model produces text; an agent produces outcomes. The second can change your environment. IBM frames it as protecting against risks of using agents and threats to agentic applications.

The Agentic AI Threat Landscape

Agentic systems are typically connected by default. They sit between users and sensitive systems, translating requests into actions. That creates a few recurring threat patterns:

- Indirect attacks in which malicious content rides along inside documents, web pages, tickets, or emails that the agent reads

- Cross-tool abuse, where a safe-looking request becomes unsafe when chained with a tool call

- Multi-step goal hijacking, where an attacker nudges the agent, step by step, into doing something it should not do

- Supply chain-style risks where a plugin, tool definition, or workflow update silently expands privileges

OWASP’s work on LLM application risks provides useful context, as many agent issues begin as classic LLM issues and worsen when tools are added.

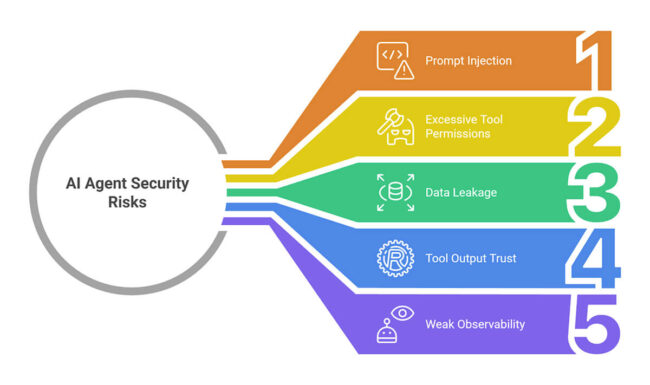

Common AI Agent Vulnerabilities and Attack Vectors

Here are the big ones security teams keep running into:

1. Prompt injection and instruction smuggling

Attackers hide instructions within data that the agent is allowed to read, hoping the agent treats it as a system directive. OWASP lists prompt injection as a top risk category for LLM applications.

2. Excessive tool permissions

An agent with broad read/write access can do real damage, either by accident or through manipulation. This is where agentic AI security is closer to identity and access management than most teams expect.

3. Data leakage through context and memory

Agents may store conversation history, summaries, embeddings, or task memory. If that memory is poisoned or shared among users, one session can affect another.

4. Tool output trust

If a tool returns untrusted data, the agent may treat it as truth and act on it-for example, consider a compromised internal API response or a malicious webpage scrape.

5. Weak observability

If you cannot reconstruct what the agent saw, decided, and executed, you cannot investigate incidents or demonstrate compliance. This is a common gap in early deployments.

Essential AI Agent Security Measures and Best Practices

Start with controls that are boring and effective:

Harden identities and secrets

- Use short-lived tokens.

- Store secrets in a managed vault, never in prompts or tool descriptions.

Constrain tools

- Allowlist tools per agent role.

- Validate tool inputs and outputs.

- Rate limit tool calls and cap spend, time, and retries.

Isolate execution

- Sandbox high-risk tools such as shell commands, code execution, and web browsing.

- Segment networks so the agent cannot laterally move.

Make decisions inspectable

- Log tool calls, parameters, results, and final actions.

- Keep a tamper-resistant audit trail.

Test like an attacker

- Run adversarial prompts and dataset-based red teaming aligned to OWASP guidance.

If you want an organizing framework for AI risk work, NIST’s AI RMF and its generative AI profile are widely used in enterprise programs.

How to Implement Zero Trust Architecture for AI Agents

Zero trust for agents means you never assume the agent is safe, even if it is internal, authenticated, or built by you. Every request is evaluated as if it could be malicious.

Practical steps:

- Verify explicitly: Authenticate the agent, the user, and each tool call, not just the initial session.

- Least privilege by default: Give the agent only the minimum scopes it needs for the current task, and expire them fast.

- Assume breach: Design so that a compromised agent cannot directly reach crown jewel systems.

- Continuous evaluation: Recheck the policy on every sensitive action, especially writes, approvals, and external communications

This is where many teams adopt agentic security solutions that sit around the agent as a policy and monitoring layer, similar to how API gateways wrap services. Vendors describe unified discovery, protection, and governance across AI interactions and agents, aligning with the direction many enterprises are moving.

FAQs

1. What makes AI agents more vulnerable than traditional AI models?

AI agents can take actions, not just generate text. They call tools, access data stores, and chain multi-step plans. This expands the attack surface from a single prompt to an entire workflow, including APIs, permissions, memory, and external content the agent reads.

2. Can an AI agent in security prevent prompt injection attacks?

It can reduce risk, but prevention is not a single switch. Strong defenses combine input filtering, separation of system instructions from untrusted data, allowlisting of tools, least privilege, and monitoring. Prompt injection remains a top risk category in the OWASP guidance, so treat it as ongoing engineering work.

3. What is the principle of least privilege in AI agent security?

Least privilege means the agent receives only the minimum access needed for the task, for the shortest possible time. For example, read-only access to a single folder rather than an entire drive, or the ability to draft an email before sending it. This limits the blast radius if the agent is tricked or fails.

4. How does zero-trust architecture protect AI agents?

Zero trust treats every action as suspicious until proven safe. The agent must authenticate to each tool, pass policy checks, and operate within strict scopes. Even if the agent is compromised, segmentation and continuous authorization can prevent it from accessing sensitive systems or performing high-impact writes.

5. What role does data encryption play in securing AI agents?

Encryption protects data in transit and at rest, reducing exposure if traffic is intercepted or storage is accessed unlawfully. It is especially important for agent memory, logs, and embeddings that may contain sensitive context. Encryption does not prevent prompt injection, but it helps limit secondary damage and leakage.