Vibe coding makes software development feel fast, fun, and almost effortless. You describe an outcome, an AI tool writes the code, and you ship a prototype in hours. But security does not automatically keep pace with that speed. Vibe coding security is about keeping those rapid builds safe enough to run without turning your app into an open door.

What Is Vibe Coding Security?

Vibe coding is a style of AI-assisted development in which a developer (or even a non-developer) describes a task in plain language and the model generates working code. The term was coined by Andrej Karpathy in early 2025 and captures the idea that the builder becomes more of a curator than an author.

Vibe coding security is the set of checks, guardrails, and habits that reduce the risk of shipping insecure code in that workflow. It matters because the code can look correct and run correctly, yet still be dangerously permissive.

The Rise of Vibe Coding and AI-Generated Code

Vibe coding has moved beyond engineering teams. Many modern code-generation tools help people go from prompt to working app quickly, which increases both the number of new applications and the attack surface they expose.

Security teams are watching this shift closely for one simple reason: code is being produced faster than traditional review and testing can keep up, especially when teams treat AI output as trusted by default.

Common Vibe Coding Security Vulnerabilities

Most risks are not exotic. They are the same classic bugs, just introduced at higher volume and sometimes with less scrutiny.

Common issues include:

- Broken authentication and missing authorization checks on routes and APIs.

- Injection flaws, such as SQL injection, due to unsafe query building and weak input validation.

- Hardcoded secrets, keys, or tokens copied into source code or client bundles.

- Insecure dependency choices, including installing packages that were never vetted.
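To make the injection item concrete, here is a minimal Python sketch using the standard-library `sqlite3` module. The `users` table and the `find_user_unsafe` / `find_user_safe` helpers are hypothetical. The unsafe version builds the query with an f-string, a pattern that is easy to accept because it works on normal input; the safe version passes the value as a bound parameter.

```python
import sqlite3

def find_user_unsafe(conn, username):
    # UNSAFE: the input is pasted into the SQL text, so quotes in it change the query.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, username):
    # SAFE: "?" is a placeholder; sqlite3 binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()

# Throwaway in-memory database with a hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

payload = "nobody' OR '1'='1"
print(find_user_unsafe(conn, payload))  # returns every row in the table
print(find_user_safe(conn, payload))    # []
```

With that payload, the concatenated query's `WHERE` clause becomes always true and returns every row, while the parameterized query treats the whole payload as a literal username and finds nothing.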

A common pattern is that the app works, so it ships. The security gaps stay invisible until someone probes them.

Why AI-Generated Code Creates Unique Security Challenges

AI-generated code introduces failure modes that feel human but occur at machine speed.

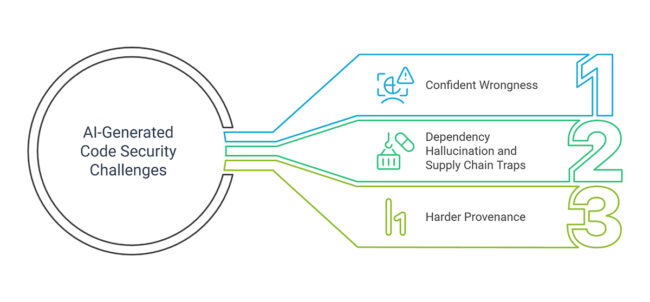

Three problems show up repeatedly:

1. Confident wrongness

The model can generate code that looks plausible yet lacks key checks or uses unsafe defaults.

2. Dependency hallucination and supply chain traps

Sometimes the model suggests packages that do not exist. Attackers can register those names and wait for someone to install them. This practice, known as slopsquatting, is being discussed as an emerging supply chain risk tied to AI hallucinations.

3. Harder provenance

When code is generated from prompts, the rationale behind a snippet can be unclear unless teams track how it was produced. Some security practitioners are beginning to argue for lightweight provenance, similar in spirit to an SBOM, but for AI-generated output.
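One possible shape for the lightweight provenance idea is sketched below in Python. Nothing here is a standard: the `ProvenanceRecord` fields, the `record_generation` and `still_matches` helpers, the file path, and the model name are all hypothetical. The sketch stores hashes of the prompt and the generated code rather than the raw text, so a reviewer can later check whether a file still matches the version that was reviewed.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class ProvenanceRecord:
    # Hypothetical schema: illustrative field names, not an established standard.
    file_path: str
    model: str
    prompt_sha256: str          # hash of the prompt, so the raw prompt need not be stored
    code_sha256: str            # hash of the code exactly as generated
    reviewed_by: Optional[str]  # who approved it, if anyone
    created_at: str             # UTC timestamp in ISO 8601 format

def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def record_generation(file_path: str, model: str, prompt: str, code: str,
                      reviewed_by: Optional[str] = None) -> ProvenanceRecord:
    """Capture where a generated snippet came from at the moment it is accepted."""
    return ProvenanceRecord(
        file_path=file_path,
        model=model,
        prompt_sha256=sha256_hex(prompt),
        code_sha256=sha256_hex(code),
        reviewed_by=reviewed_by,
        created_at=datetime.now(timezone.utc).isoformat(),
    )

def still_matches(record: ProvenanceRecord, current_code: str) -> bool:
    """True if the code has not changed since the record was made."""
    return record.code_sha256 == sha256_hex(current_code)

rec = record_generation(
    "app/routes/login.py",  # hypothetical path
    "example-model",        # placeholder model name
    "Write a login route for the admin panel",
    "def login(): ...",
    reviewed_by="alice",
)
print(json.dumps(asdict(rec), indent=2))
```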

A growing body of research has also used static analysis and CWE mapping to identify real security weaknesses in AI-attributed code in public repositories.

Security Best Practices for Vibe Coding Workflows

Vibe coding does not need heavy restrictions to be safe. It needs quick, repeatable guardrails that keep pace with AI-generated development.

A practical baseline:

- Treat AI output like junior code: Review it, test it, and assume it can be insecure.

- Lock down auth early: Centralize authentication and require authorization checks for every sensitive action.

- Add automated scanning in CI: Run SAST and dependency scanning, and fail builds on high-severity findings.

- Control dependencies: Allowlist trusted packages, pin versions, and review new libraries before installation.

- Protect secrets: Use a secret manager, block secrets from commits, and continuously scan repositories.

- Defend against slopsquatting: Verify every suggested package name, publisher, and download history before adding it.
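The "lock down auth early" item can be sketched in Python as a single decorator that every sensitive handler must pass through. The `require_permission` decorator, the `User` type, the `Forbidden` exception, and the `invoices:delete` permission string are illustrative assumptions, not any framework's API; in a real web app, the framework's own auth hooks would normally take this role.

```python
from dataclasses import dataclass, field
from functools import wraps
from typing import Optional, Set

class Forbidden(Exception):
    """Raised when the caller is missing a required permission."""

@dataclass
class User:
    name: str
    permissions: Set[str] = field(default_factory=set)

def require_permission(permission: str):
    """Single, central authorization check for sensitive actions (hypothetical helper)."""
    def decorator(func):
        @wraps(func)
        def wrapper(user: Optional[User], *args, **kwargs):
            # Deny by default: no user, or a user without the permission, never reaches func.
            if user is None or permission not in user.permissions:
                raise Forbidden(f"{permission!r} required")
            return func(user, *args, **kwargs)
        return wrapper
    return decorator

@require_permission("invoices:delete")
def delete_invoice(user: User, invoice_id: int) -> str:
    # The handler body can assume authorization has already been checked.
    return f"invoice {invoice_id} deleted by {user.name}"

admin = User("ana", {"invoices:delete"})
print(delete_invoice(admin, 7))  # invoice 7 deleted by ana
try:
    delete_invoice(User("bo"), 7)
except Forbidden as exc:
    print("denied:", exc)        # denied: 'invoices:delete' required
```

Keeping the check in one place means a newly generated route cannot quietly skip it, and the default outcome for a missing user or missing permission is denial rather than access.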

If you want a simple mental model, think of guidance over gates. Make the secure path the easiest, so fast shipping does not mean blind shipping.

FAQs

1. What makes vibe coding different from traditional development?

Traditional development assumes that the engineer writes most of the code and understands its structure. Vibe coding shifts the work toward prompting, assembling, and iterating on AI-generated output. The speed is higher, but so is the review burden: more code arrives that no one wrote line by line, and it may be accepted without deep inspection.

2. How common are vulnerabilities in AI-generated code?

Evidence from large-scale analyses of AI-attributed code in public repositories, using static analysis tooling, shows thousands of CWE instances across many vulnerability types. The exact rate varies by dataset and method, but the takeaway is clear: insecure patterns appear at a meaningful scale.

3. Can vibe coding be used safely in production environments?

Yes, but only with guardrails. Production use requires code review, automated security scanning, strong auth patterns, and strict dependency controls. The risk comes from skipping those steps, even if the output looks polished. Treat AI-generated code as untrusted until proven otherwise.

4. What is slopsquatting in vibe coding?

Slopsquatting is a supply chain attack in which an AI model suggests a non-existent package name, and an attacker registers it to distribute malicious content. It exploits dependency hallucinations and the habit of copy-paste installation. Verification and allowlists reduce the risk.
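Allowlists and version pinning can be enforced mechanically before anything is installed. The Python sketch below checks `requirements.txt`-style lines against a hypothetical team allowlist (`ALLOWED_PACKAGES`) and rejects anything not pinned with `==`, comparing names after PEP 503 normalization. The policy is an assumption, not a pip feature, and it is deliberately strict: extras, URLs, and environment markers are simply flagged for manual review.

```python
import re

# Hypothetical team allowlist; in practice this would live in a reviewed config file.
ALLOWED_PACKAGES = {"flask", "requests", "sqlalchemy"}

# Accept only "name==version": no ranges, extras, URLs, or environment markers.
PINNED = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)==([A-Za-z0-9.+!_-]+)$")

def normalize(name: str) -> str:
    """PEP 503 name normalization: case-insensitive, with runs of '-', '_', '.' unified."""
    return re.sub(r"[-_.]+", "-", name).lower()

def check_requirements(lines):
    """Return a list of human-readable problems; an empty list means the file passes."""
    allowed = {normalize(p) for p in ALLOWED_PACKAGES}
    problems = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        match = PINNED.match(line)
        if match is None:
            problems.append(f"unpinned or unsupported requirement: {line}")
            continue
        name = normalize(match.group(1))
        if name not in allowed:
            problems.append(f"not on allowlist, verify publisher and history first: {name}")
    return problems

for problem in check_requirements([
    "Flask==3.0.3",
    "requests>=2",
    "flask-secure-login-utils==1.0.0",  # hypothetical package an assistant might suggest
]):
    print(problem)
```

Here the check reports two problems: `requests` is not pinned, and the hypothetical `flask-secure-login-utils` is not on the allowlist. The second is the point at which someone should confirm the package actually exists and check its publisher and download history before adding it.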

5. How can organizations secure vibe coding without slowing development?

Focus on automation and defaults. Put dependency scanning and SAST in CI, use templates with secure auth, and maintain a trusted package list. Add fast feedback so developers get guidance while coding, not after release. That maintains velocity while reducing preventable mistakes.
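A CI gate that fails the build on high-severity findings can be as small as the Python sketch below. It assumes the scanners' output has already been normalized into a JSON list of objects with `severity`, `rule`, and `file` keys; that format, the `BLOCKING_SEVERITIES` policy, and the `gate` / `run` function names are hypothetical, since each real SAST or dependency scanner emits its own schema and many also offer their own exit-code options.

```python
import json
import sys

# Severities that should stop the pipeline (an assumed policy; tune per team).
BLOCKING_SEVERITIES = {"high", "critical"}

def blocking_findings(findings):
    """Return only the findings whose severity should fail the build."""
    return [f for f in findings
            if str(f.get("severity", "")).lower() in BLOCKING_SEVERITIES]

def gate(findings) -> int:
    """Print blocking findings and return a process exit code (0 = pass, 1 = fail)."""
    blockers = blocking_findings(findings)
    for f in blockers:
        print(f"BLOCKING [{f.get('severity')}] {f.get('rule', '?')} in {f.get('file', '?')}")
    return 1 if blockers else 0

def run(path: str) -> int:
    """Load a normalized findings file (hypothetical format) and apply the gate."""
    with open(path, encoding="utf-8") as fh:
        return gate(json.load(fh))

if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage in CI: python ci_gate.py findings.json  (a non-zero exit fails the job)
    sys.exit(run(sys.argv[1]))
```

Because the gate only reads a file and returns an exit code, it runs in seconds, which keeps the feedback loop fast enough that developers see the failure while the change is still fresh.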